Telepathy is a concept usually associated with science fiction. The idea of reading another person’s thoughts or communicating with them without words seems preposterous, and yet it captivates us because it suggests the possibility of instantaneous, unhindered communication. More often tied to pop culture icons like Star Trek than to actual science, telepathy probes the boundaries of what the brain might be capable of. However, what’s known as “synthetic telepathy,” the ability of two brains to communicate with each other using a computer as an intermediary, may not be as far off as it seems. Researchers are already developing technologies that may one day bring us closer to this goal.

Foundational Technology: The Brain-Computer Interface

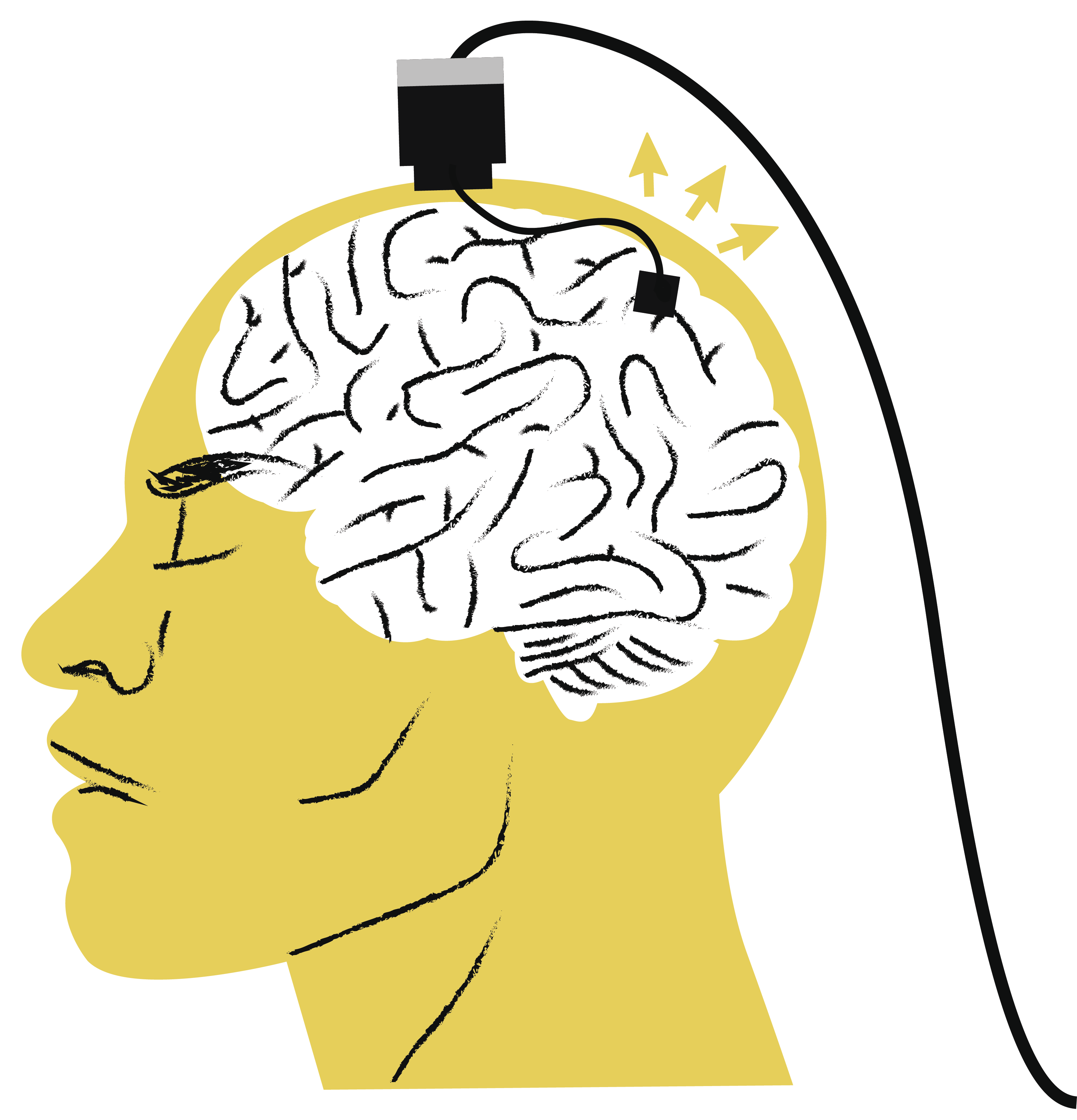

The foundational technology for potential synthetic communication today is the brain-computer interface (BCI). As the name suggests, a BCI is a link between a brain and a computer that allows the brain’s neural signals to be read and processed by a computer. For neural signals to control an external machine or computer, they must first be recorded and translated into a form the computer can act on. Recording is typically done with wires implanted in the brain, or with electrodes that sit either on the surface of the brain or on the scalp. The recordings are then digitized and processed with commercial or custom software that cleans and filters the data before it is put to use. The earliest BCIs used electroencephalography (EEG) to record brain activity noninvasively from the scalp. Jacques Vidal, often considered the first BCI researcher, observed that external stimuli (such as light flashes or mechanical sensations) evoked waves of activity that could be recorded, distinguished from ongoing brain activity, and used to control a computer cursor [1]. Since then, the original BCI concept has been expanded and applied to a variety of scenarios.
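Vidal’s observation rests on a simple principle: a response that is time-locked to a stimulus survives averaging across repeated trials, while ongoing background activity, which is not time-locked, tends to cancel out. The Python sketch below illustrates this with synthetic data only; the sampling rate, the shape and timing of the evoked response, the noise level, and the trial count are illustrative assumptions, not values from Vidal’s work [1].

```python
import math
import random

def simulate_trial(n_samples, fs, rng, noise_sd=5.0):
    """One synthetic EEG epoch, time-locked to a stimulus at t = 0.

    Assumed model: a Gaussian-shaped evoked bump (amplitude 2, peak at
    100 ms) plus independent Gaussian background activity.
    """
    trial = []
    for i in range(n_samples):
        t = i / fs  # seconds after stimulus onset
        evoked = 2.0 * math.exp(-((t - 0.1) ** 2) / (2 * 0.02 ** 2))
        trial.append(evoked + rng.gauss(0.0, noise_sd))
    return trial

def average_epochs(epochs):
    """Sample-by-sample mean across stimulus-locked epochs."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]

fs = 250          # sampling rate in Hz (assumed)
n_samples = 75    # 300 ms epoch
rng = random.Random(0)
epochs = [simulate_trial(n_samples, fs, rng) for _ in range(400)]
avg = average_epochs(epochs)

peak_index = max(range(n_samples), key=lambda i: avg[i])
print(f"peak of the average at {1000 * peak_index / fs:.0f} ms")
```

Averaging N trials shrinks uncorrelated background noise by roughly a factor of √N, which is why the evoked peak stands out in the average even though it is buried in any single trial.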

Virtual Motor Prostheses and Artificial Sensory Experiences

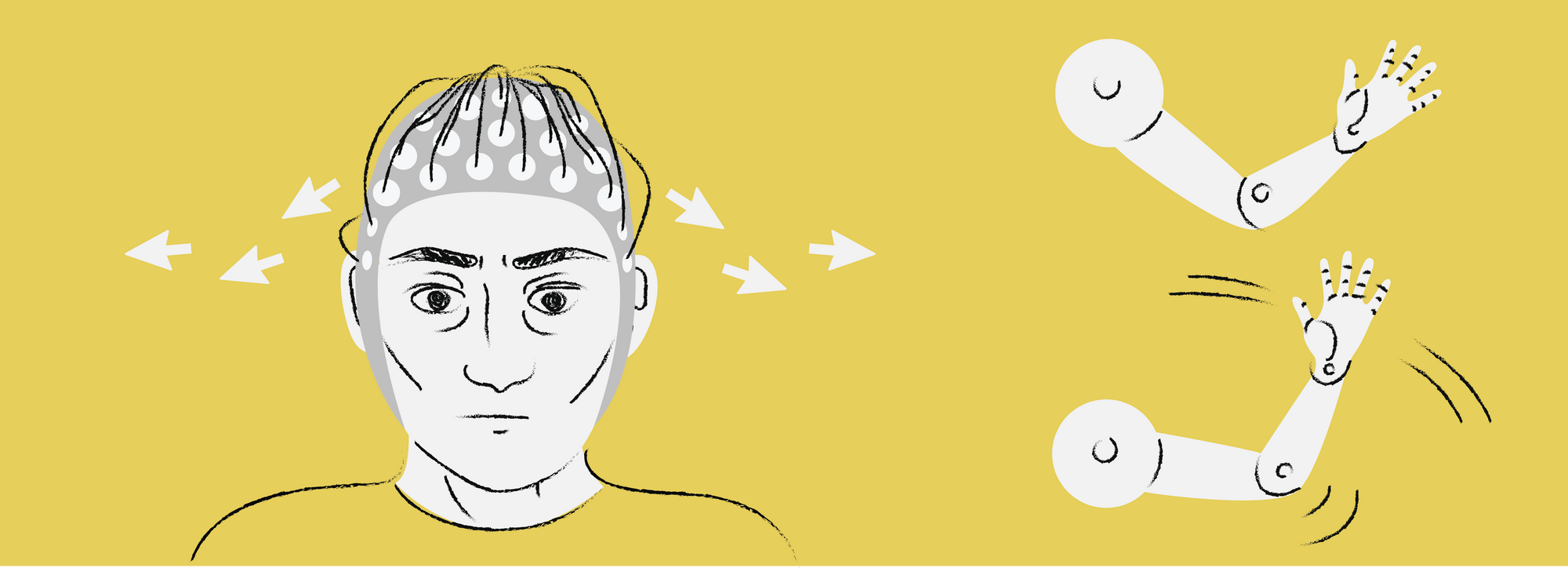

Much recent BCI research has focused on techniques that transfer information between mind and machine to replace or enhance motor and sensory signals. A study by Carmena et al. demonstrated that monkeys can be trained to control a robotic arm with a BCI [2]. The monkeys were implanted with microwires that recorded electrical signals from several frontal and parietal areas, while electromyography (EMG) recorded electrical signals from arm muscles. In the first phase of the study, each monkey controlled a cursor on a computer screen by physically manipulating a pole in one of several ways: moving it across the table, holding it stationary while varying grip force, or moving and then gripping it. During these trials, the recorded neural signals carried information about the monkey’s hand position, hand velocity, and grip force, and computer algorithms were tuned to extract this information. Once training and optimization were complete, the study entered its second phase, in which the cursor switched from “pole control” to “brain control”: it was driven by neural signals alone, and the monkeys no longer needed the pole to move it. The monkeys eventually completed the three computer tasks without moving their arms at all, showing that the model could decode neural signals, that the training system worked, and that monkeys can learn a brain-controlled task [2]. Research has since gone a step further with brain-computer-brain interfaces (BCBIs), in which the user not only sends information to a computer but also receives feedback delivered directly to the brain.
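The decoding step can be illustrated with a linear decoder, one common approach in which a kinematic variable such as hand velocity is modeled as a weighted sum of binned firing rates, with weights fit by least squares on data from the pole-control phase. This Python sketch is a generic illustration under that assumption, not the specific models used by Carmena et al. [2]; the three neurons, their true weights, and the synthetic firing rates are hypothetical.

```python
import random

def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

def fit_linear_decoder(rates, velocity):
    """Least-squares weights [bias, w1, w2, ...] via the normal equations."""
    X = [[1.0] + list(r) for r in rates]  # prepend a constant bias column
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(X, velocity)) for i in range(k)]
    return solve_linear_system(xtx, xty)

def predict_velocity(weights, rate_vector):
    """Decode velocity from one time bin of firing rates."""
    return weights[0] + sum(w * r for w, r in zip(weights[1:], rate_vector))

# Synthetic "pole-control" training data: 200 time bins from 3 hypothetical
# neurons whose rates (spikes/s) relate linearly to hand velocity plus noise.
rng = random.Random(1)
true_w = [0.5, 0.8, -0.3, 0.1]
rates = [[rng.uniform(0, 50) for _ in range(3)] for _ in range(200)]
velocity = [predict_velocity(true_w, r) + rng.gauss(0, 0.1) for r in rates]

w = fit_linear_decoder(rates, velocity)
print("fitted weights:", [round(v, 2) for v in w])

# "Brain control": a new bin of neural activity alone now yields a velocity.
print("decoded velocity:", round(predict_velocity(w, [10.0, 20.0, 30.0]), 2))
```

Once the weights are fit, the same `predict_velocity` function can drive the cursor from neural activity alone, which is the essence of the switch from pole control to brain control.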

A study by O’Doherty et al. used a BCBI to deliver sensory information directly to the brains of two monkeys [3]. The monkeys were implanted with microwire arrays in both the primary motor cortex (M1) and the primary somatosensory cortex (S1). In a setup similar to that of the previous study, the monkeys first performed a series of hand-control trials, using a joystick to move a cursor on a computer monitor. On the screen were three objects that looked identical but carried different artificial textures. These textures were entirely virtual: they were conveyed to the monkey through intracortical microstimulation (ICMS) of S1, a technique that delivers small electrical pulses to the brain and that was used here, at different pulse frequencies, to simulate different textures. The monkeys were rewarded when they selected the object with the rewarded texture and held the cursor over it. In a second set of trials, the joystick was removed or disconnected, and the monkeys learned to move the cursor and select the textures using neural signals alone. The study found that the monkeys could accurately discriminate between these artificial textures. Although distinguishing virtual textures is not the same as distinguishing real, physical ones, these findings are an important first step toward computerizing more complex aspects of sensory experience. The researchers then replaced the cursor with a realistic virtual monkey arm on the screen that could be moved via brain control to select among the textures. The monkeys controlled this arm from a first-person perspective, effectively operating a virtual prosthesis with their minds while receiving artificial feedback through cortical stimulation, a close analogue to controlling a real arm [3]. A related idea, the neuromotor prosthesis (NMP), has been tested in humans.
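ICMS conveys an artificial texture through the timing of electrical pulses. As a rough illustration, the Python sketch below generates pulse onset times for a regular train and maps hypothetical texture labels to different pulse frequencies. The labels, frequencies, and duration are assumptions chosen for illustration and are not the stimulation parameters reported by O’Doherty et al. [3].

```python
def pulse_times(frequency_hz, duration_s):
    """Onset times (seconds) of a regular pulse train; empty if frequency is 0."""
    if frequency_hz <= 0:
        return []
    period = 1.0 / frequency_hz
    n_pulses = int(round(duration_s * frequency_hz))
    return [i * period for i in range(n_pulses)]

# Hypothetical texture-to-frequency mapping (Hz), for illustration only.
TEXTURE_FREQUENCIES = {
    "texture_a": 200.0,
    "texture_b": 400.0,
    "texture_c": 0.0,   # an object that delivers no stimulation
}

def stimulation_for(texture, duration_s=0.1):
    """Pulse onsets to deliver while the cursor or virtual hand touches an object."""
    return pulse_times(TEXTURE_FREQUENCIES[texture], duration_s)

for name in TEXTURE_FREQUENCIES:
    print(name, len(stimulation_for(name)), "pulses in 100 ms")
```

Distinct pulse frequencies give each virtual object a distinguishable stimulation pattern, which is what the monkeys had to learn to tell apart.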

NMPs, prostheses controlled directly by the brain, were explored by Hochberg et al. in a study of a man with tetraplegia, referred to as MN [4]. MN was implanted with a sensor known as BrainGate, which is designed to record neural activity in motor areas of the brain and convert it into computer commands. The sensor was implanted in MN’s right precentral gyrus, in the region of motor cortex that controls the left hand. MN was instructed to imagine performing several actions related to moving a computer mouse while his neuronal activity was recorded. He was then able to move a cursor around a screen using neural activity alone. With this skill, MN could open email, play simple computer games, and change the volume on a TV, all through his decoded neuronal activity and software interfaces. He could also open and close a prosthetic hand and use a robotic arm to grasp and move an object. Most notably, MN performed these actions quickly and while holding a conversation, showing that operating the prosthesis did not interfere with other functions [4].

The implications of this study are promising, especially for people who face permanent paralysis due to devastating injuries of the central nervous system. MN required only the implantation of the BrainGate sensor, a chip significantly smaller than a penny, to connect with the prosthesis. Whereas the monkeys in many of the studies above needed a screen as an intermediary to control the robotic arm, MN learned to control the prosthetic hand and robotic arm directly, without one [4]. These prostheses could allow people to write, grasp objects, and perform many everyday actions that would otherwise be impossible for them. However, they do not yet integrate sensory feedback. Technologies such as the BCBI that provide artificial sensation still need further refinement before they can be used in human subjects, but this next step is necessary for users to gain full, bidirectional control of a prosthesis. If technologies such as BrainGate and the BCBI used in monkeys could be merged, someone with a prosthetic limb could not only grasp objects but also feel their texture, an experience much closer to that of a natural hand.

Transferring Information between Brains

Beyond brain-to-computer information transfer, researchers have also explored brain-to-brain information transfer. These studies use brain-to-brain interfaces (BTBIs) to connect the brains of two individuals so that they can collaborate and share information.

One such study, conducted by Pais-Vieira et al., connected the brains of two rats so that they could collaborate on a task using their minds alone [5]. As previous research has shown, signals from the primary motor cortex can be decoded by a computer to drive certain tasks; the aim of this study, however, was to use a biological brain as the decoder. First, the researchers set up two levers and turned on a light above one of them, and the first rat (the encoder rat) was trained to press the lever below the light for a water reward. Similarly, the researchers built an opening whose width could be set to either narrow or wide. The rat was trained to gauge the width with its facial whiskers and then poke one of two doors if the opening was narrow, and the other if it was wide. The first task was visual and the second tactile, so the two tasks engaged very different areas of the brain. The second rat (the decoder rat) was then trained to respond to electrical pulses delivered directly to its brain: it learned to choose one lever or door when multiple pulses were sent, and the other lever or door when only a single pulse was sent.

The researchers then began the so-called “collaborative trials.” The encoder rat responded as usual to the light or the opening’s width by selecting the corresponding lever or door. Its neural activity was recorded and converted into electrical pulses in real time: selecting the right lever or narrow door produced a single pulse, while selecting the left lever or wide door produced a series of pulses. The decoder rat then selected the matching lever or door based solely on this pulse information. If the decoder rat chose correctly, both rats received a reward, which encouraged them to work as a team. The results were even reproduced with the encoder rat in Brazil and the decoder rat in the USA, where the only link between them was brain activity transmitted over the Internet [5]. This study was a milestone for synthetic telepathy: the two rats collaborated and shared information purely brain to brain, with only a computer between them.
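The collaborative trial can be viewed as a simple communication protocol: the encoder rat’s choice becomes a pulse count, and the decoder rat’s choice depends only on how many pulses arrive. The Python sketch below models this protocol in idealized form. It starts from the encoder’s choice rather than from recorded cortical activity, and the number of pulses in the train and the decoder’s error rate are hypothetical parameters, not values from Pais-Vieira et al. [5].

```python
import random

# Pulse counts for each choice. The single-pulse vs. multi-pulse split follows
# the description above; the exact number of pulses in the train is assumed.
SINGLE_PULSE = 1   # right lever or narrow door
PULSE_TRAIN = 5    # left lever or wide door (hypothetical count)

OPTIONS = {"lever": ("right", "left"), "door": ("narrow", "wide")}

def encode(choice):
    """Convert the encoder rat's choice into a number of stimulation pulses."""
    return SINGLE_PULSE if choice in ("right", "narrow") else PULSE_TRAIN

def decode(n_pulses, task, rng, error_rate=0.0):
    """Idealized decoder rat: one pulse -> first option, several -> second.

    error_rate is a hypothetical probability that the decoder picks the
    other option anyway.
    """
    first, second = OPTIONS[task]
    choice = first if n_pulses <= 1 else second
    if rng.random() < error_rate:
        choice = second if choice == first else first
    return choice

def run_trials(task, n_trials, error_rate=0.0, seed=0):
    """Fraction of collaborative trials in which both rats are rewarded."""
    rng = random.Random(seed)
    rewarded = 0
    for _ in range(n_trials):
        # The cue (light position or opening width) picks the correct option;
        # the encoder rat is assumed to respond correctly every time.
        encoder_choice = rng.choice(OPTIONS[task])
        decoder_choice = decode(encode(encoder_choice), task, rng, error_rate)
        if decoder_choice == encoder_choice:  # both rats receive a reward
            rewarded += 1
    return rewarded / n_trials

print(run_trials("lever", 1000))                  # idealized channel
print(run_trials("door", 1000, error_rate=0.3))   # noisy decoder
```

Raising `error_rate` shows how every decoding mistake costs both animals a reward, since the reward depends only on whether the decoder’s choice matches the encoder’s.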

A similar study conducted by Rao et al. here at the University of Washington focused instead on brain-to-brain information transfer between humans, and resulted in the first known direct brain-to-brain connection between people [7]. In this experiment, one person (the “sender”) sat in front of a screen wearing an EEG cap that recorded their brain activity. As in the earlier studies, the sender was trained to control the movement of a cursor by imagining hand movements. The participants were then introduced to a basic computer game in which either an enemy rocket or a supply plane appeared over a tank. The sender’s goal was to use brain activity to move the cursor to the “Fire” circle when a rocket was over the tank, but not when a supply plane was. However, the sender could not actually control the game. Instead, the second participant (the “receiver”) had a touchpad connected to the game’s controls, but the receiver’s screen was blank. The sender and receiver therefore had to work together: only the sender could see when to fire, but only the receiver could actually fire. The sender’s brain activity was recorded through EEG and transmitted to the receiver, where it triggered a transcranial magnetic stimulation (TMS) coil placed over the receiver’s head. The TMS-induced activity caused the receiver’s hand to move and press the touchpad, firing in the game. In this way, the receiver played the game successfully without ever seeing it [7].
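Turning the sender’s EEG into a “fire” command is a detection problem. One common approach for motor imagery, assumed here rather than taken from Rao et al. [7], relies on the fact that power in the mu rhythm (roughly 8–12 Hz) over motor cortex typically drops when a person imagines moving. The Python sketch below estimates power at 10 Hz with the Goertzel algorithm and issues a command when that power falls below a hypothetical fraction of a resting baseline. The sampling rate, threshold, and synthetic signals are all assumptions, and the step that sends the command over the Internet to trigger the TMS pulse is omitted.

```python
import math

def goertzel_power(samples, fs, target_hz):
    """Power of the DFT bin nearest target_hz, computed with the Goertzel algorithm."""
    n = len(samples)
    k = round(n * target_hz / fs)  # index of the nearest DFT bin
    omega = 2.0 * math.pi * k / n
    coeff = 2.0 * math.cos(omega)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2

def should_fire(window, fs, baseline_power, drop_ratio=0.5):
    """Hypothetical rule: fire when 10 Hz power falls below half the resting baseline."""
    return goertzel_power(window, fs, 10.0) < drop_ratio * baseline_power

fs = 256                          # sampling rate in Hz (assumed)
t = [i / fs for i in range(fs)]   # one-second analysis window
rest = [10.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]     # strong mu rhythm
imagery = [3.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]   # suppressed mu rhythm

baseline = goertzel_power(rest, fs, 10.0)
print("rest window fires:", should_fire(rest, fs, baseline))
print("imagery window fires:", should_fire(imagery, fs, baseline))
```

Goertzel computes a single DFT bin in one pass over the samples, which makes it a cheap choice when only one frequency band needs to be monitored in real time.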

While synthetic telepathy as science fiction imagines it is still far off, modern research in brain-to-brain and brain-to-computer information transfer suggests that a more pragmatic version of mental communication may someday become a reality. That said, the field faces significant roadblocks: while we have a reasonable understanding of how information is processed in the primary motor and sensory cortices, we know far less about the brain regions associated with higher-order functions like thinking and planning. Even so, brain-to-brain connection research is an important demonstration of the brain’s ability to transmit and receive information from a variety of sources, even if the information we can currently transfer is simple.

The Future of the Digital Mind

These studies show the immense untapped potential of the human brain to connect with computers and other brains and thereby extend its own capabilities. Because these technologies are still in their fledgling stages, however, we know little about how they will perform in real-world applications. The findings have already driven progress on motor applications, ranging from restoring or replacing movement for people who are paralyzed to potentially augmenting existing abilities. A better understanding of motor function could, for instance, allow a worker who needs to lift a heavy object to control heavy machinery directly, as a kind of prosthesis. Beyond movement, the delivery of sensory information could lead to new types of prosthetics for people who lack certain sensory inputs, such as those who are deaf or blind.

BTBIs also have the potential to revolutionize communication: a more sophisticated understanding of the neural systems involved in communication could someday change the way we convey ideas and interact with other people. Such changes could also reshape systems we take for granted, such as teaching, because teaching and learning involve much more than simple verbal communication. For example, brain-to-brain information transfer might someday allow a teacher to send signals from their motor cortex to a student’s, physically and remotely guiding the student through a movement and potentially making learning easier. While synthetic telepathy is still in its infancy, this developing technology is exciting to consider and may someday change the way we interact with the world and with each other.

References

- Vidal, J. J. (1973). Toward direct brain-computer communication. Annual Review of Biophysics and Bioengineering, 2, 157–180. doi:10.1146/annurev.bb.02.060173.001105

- Carmena, J. M., Lebedev, M. A., Crist, R. E., O'Doherty, J. E., Santucci, D. M., Dimitrov, D. F., & Patil, P. G. (2003). Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biology. doi:10.1371/journal.pbio.0000042

- O'Doherty, J. E., Lebedev, M. A., Ifft, P. J., Zhuang, K. Z., Shokur, S., Bleuler, H., & Nicolelis, M. A. (2011). Active tactile exploration using a brain-machine-brain interface. Nature, 479, 228–232. doi:10.1038/nature10489

- Hochberg, L. R., Serruya, M. D., Friehs, G. M., Mukand, J. A., Saleh, M., Caplan, A. H., & Branner, A. (2006). Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature, 442, 164–171. doi:10.1038/nature04970

- Pais-Vieira, M., Lebedev, M., Kunicki, C., Wang, J., & Nicolelis, M. A. (2013). A brain-to-brain interface for real-time sharing of sensorimotor information. Scientific Reports, 3, 1319. doi:10.1038/srep01319

- Yoo, S., Kim, H., Filandrianos, E., Taghados, S. J., & Park, S. (2013). Non-invasive brain-to-brain interface (BBI): Establishing functional links between two brains. PLoS ONE. doi:10.1371/journal.pone.0060410

- Rao, R. P. N., Stocco, A., Bryan, M., Sarma, D., Youngquist, T. M., Wu, J., et al. (2014). A direct brain-to-brain interface in humans. PLoS ONE, 9(11), e111332. doi:10.1371/journal.pone.0111332