One of the most fundamental things separating humans from all other species on Earth is our ability to use language. While other living things may be able to communicate brief signals with various sounds or chemicals, the complexity and variability of human language is unique to our species. The capacity for language is often directly linked to the higher-order thinking that enables humans to create, evaluate, and analyze. Because of this, the study of how language is organized in the brain has been growing rapidly as technology advances [1]. The formal study of language began thousands of years ago. Major language changes were spreading across the ancient world, and people needed to learn these changes in order to participate in their advancing societies [2]. However, only in the past few decades have scientists had the technology needed to gain insight into the neurological basis of language [2]. Our understanding of language processing in the brain rests on three crucial concepts: recognizing an incoming signal, decoding the signal, and ultimately establishing comprehension. Despite the recent blossoming of the field, there is still considerable debate over exactly how the brain goes from receiving input to understanding the message it carries.

The understanding of a phrase can be broken down into three major steps: accepting input information, processing the structure of the incoming information, and finally comprehending the meaning of the phrase [3] .

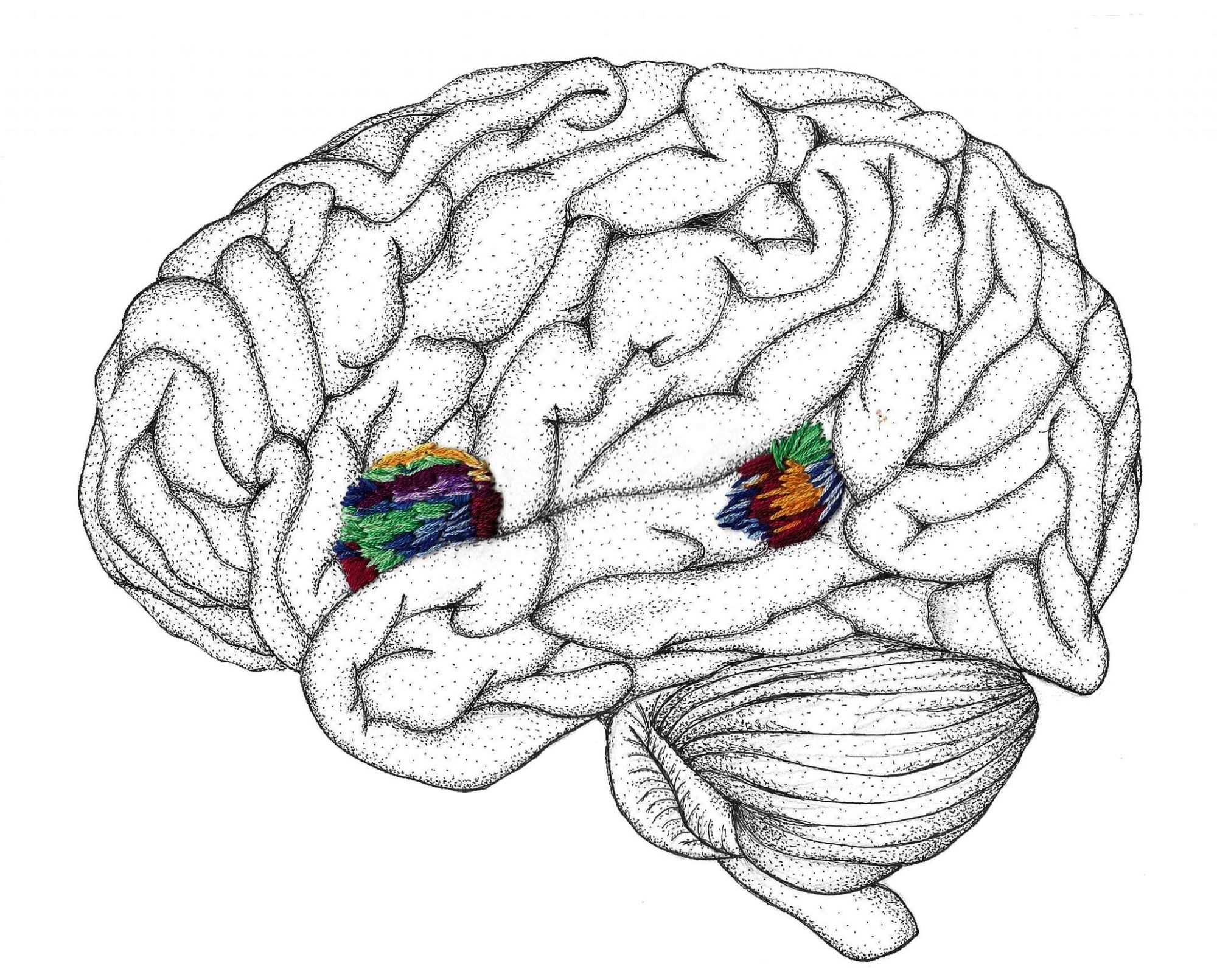

Each of these steps can in turn be broken down into several substeps carried out in specific regions of the brain. For spoken languages, the primary auditory cortices serve as the main regions for the first step of language processing: recognizing and accepting input information. Interestingly, while the auditory cortices are activated by speech and non-speech sounds alike, the brain areas involved in the last two steps of language processing are triggered exclusively by speech sounds. This suggests that some brain structure acts as a filter, removing non-speech sounds from the language-processing system. This filtration turns out to consist of a complex series of interactions between brain structures in and around the auditory cortices [3].

When the brain first perceives any kind of sound, a region of the temporal lobe within one of the most highly developed parts of the human brain is activated [3]. Milliseconds later, the auditory cortices in both the left and right hemispheres begin to show activity. Although they act in concert, the two hemispheres have been shown to function very differently [4]. The left auditory cortex reacts most strongly to speech sounds, while the right more accurately detects differences in tone and pitch across all types of sounds. This division of labor matters because processing language requires both detecting spoken words and understanding the intonation patterns that may carry clues about their meaning. In English, for example, a speaker can make a statement sound like a question by raising their pitch at the end of the sentence. The importance of tone is even clearer in tonal languages like Mandarin Chinese, where the syllable “ma” means “hemp plant” when said with a rising tone but “mother” when said with a high, level tone. The two hemispheres work together to sort through this incoming auditory information before passing it on to the parts of the brain associated with syntactic (structure-based) and semantic (meaning-based) comprehension.

It was recently discovered that incoming auditory information follows two separate paths to the second area of language processing, which is also in the temporal lobe [5] . When any sort of complex sound with varying pitches and contours is heard, the first path activates.

Such sounds are defined as any sound complex enough to possibly be interpreted as speech. Because spoken languages contain a multitude of varying sound signals, this pathway is not activated by single, simple sounds, only by more complicated combinations [6]. The second pathway activates only when the incoming speech signals are intelligible to the listener [5]. This distinction mirrors everyday experience: you can recognize that a foreign language is being spoken without knowing all of the sounds in its inventory, but when you hear your own language, you perceive the boundaries between words and grasp their meanings effortlessly, almost subconsciously. This may be because the two types of information travel through different pathways in the brain. The difference suggests that one brain structure may act as a short-term memory bank for all phonetic stimuli, while another responds specifically to information the listener recognizes and comprehends [6].

In the case of signed languages, the input information does not consist of auditory signals. Instead, when signers receive an incoming linguistic stimulus, it first activates their primary visual cortex, located at the back of the brain [7]. Intriguingly, in the same way that a hearing person’s brain filters speech from non-speech sounds, signers show different right-hemisphere activity when seeing a gesture versus a sign [8]. For example, pantomiming the action of sweeping the floor would elicit different brain activity in a signer than the signed sentence “I am sweeping the floor.” This implies the same sort of linguistic filter posited by those who study spoken languages [9]. Although two separate pathways are suspected to be responsible for this filtration in signed languages as well, research on linguistic processing in the deaf brain is still in its infancy, though it is advancing rapidly [7].

Because sign languages rely on the visual world, they have many characteristics not present in spoken languages, but the similarities run far deeper. When first exploring sign language in the brain, researchers turned to the right hemisphere because of its role in visuospatial processes like facial recognition. Several studies found that the rear of the right hemisphere was indeed activated by incoming signed input [8]. A couple of studies then compared this area in native American Sign Language (ASL) users and in people who learned ASL later in life. Interestingly, they found the same area of the right hemisphere to be significantly more active in native users who learned sign language before a certain age. The age cutoff for this brain activity aligns with the critical period for language learning that had previously been defined only for hearing people [8]. This research implicates this portion of the right hemisphere in the first step of signed language processing: accepting input information. After this step, however, the same neurological areas activate in deaf and hearing people alike, implying a shared system for processing structural and meaning-based information [7].

The syntactic and semantic levels of signed language processing are nearly identical to those seen in the brains of people using spoken language. The differences that are occasionally found usually trace back to the physical modality of signing, or how signed languages consist of visual linguistic input, necessitating the right hemisphere’s visuospatial involvement [7] . It was actually the similarities between spoken and signed languages regarding left-hemisphere brain activity that helped disprove the misconception that sign languages are merely a series of complex gestures [10] .

In fact, signed languages possess all of the same syntactic complexities and rules that define spoken languages, and thus share many of the neural pathways involved in language processing after the initial input step. Once information from the visual or auditory input has been delivered to the left temporal lobe, the brain begins the modality-independent part of language processing: decoding the structure of the incoming phrase [9].

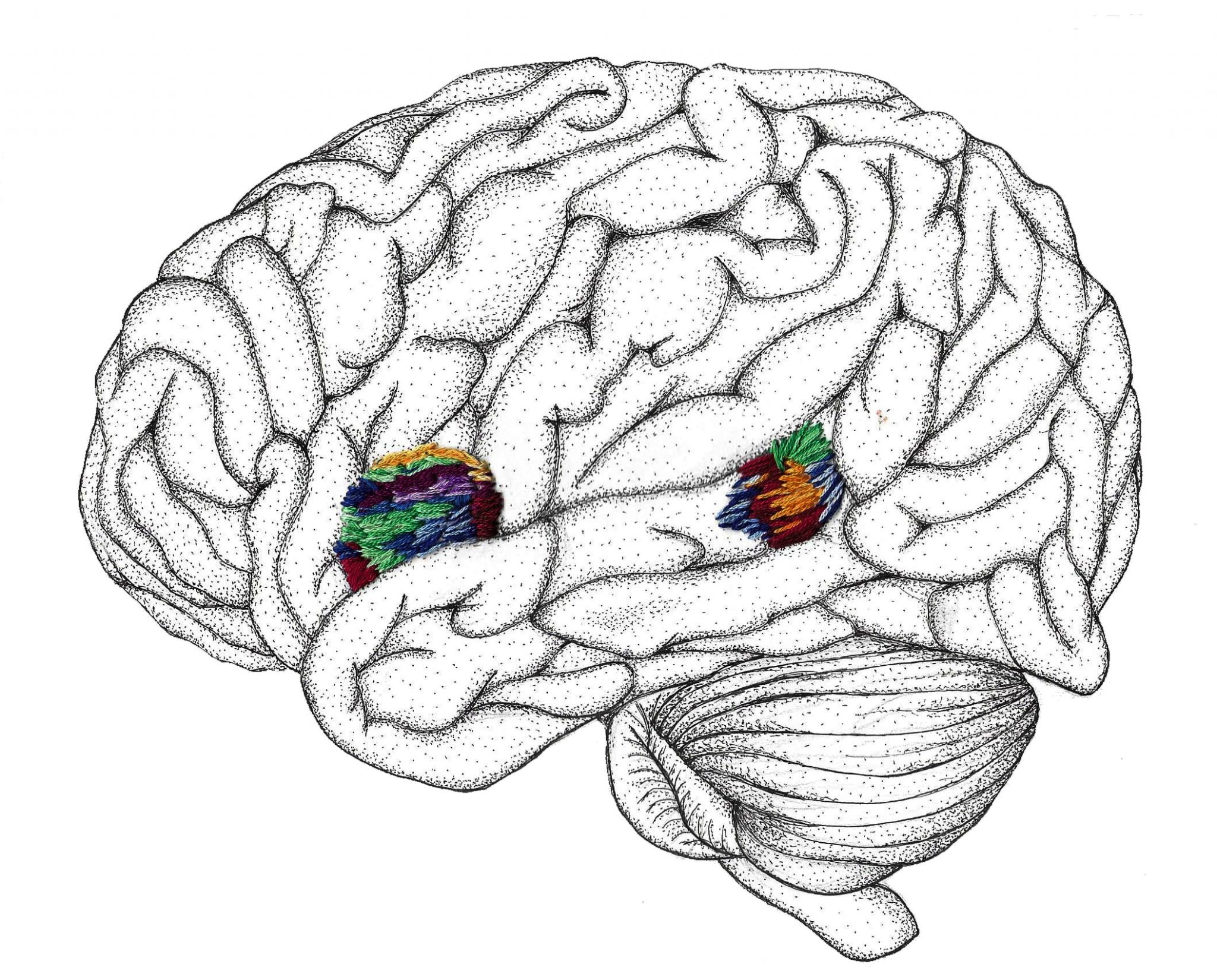

Currently there are many hypotheses about how the brain sorts through and deconstructs sentence structure, likely because of the staggering array of possible structures and rule combinations that humans are capable of mastering. About 7,000 languages are in use today, and although certain general structures are common, each language has its own intricacies [11]. Linguists classify sentence structure by the order in which the crucial components of a sentence (subject, verb, and object) must appear. English is an SVO (subject, verb, object) language because English sentences must be built according to this general structure. For example, “She loves him” (SVO) would sound correct to an English speaker, but “Him she loves” (OSV) would seem strange and ungrammatical. Nearly every possible ordering of these three components is used by at least one language today, and some languages have no well-defined word order at all. Yet each of these languages is fully developed and lets its community communicate equally well. Word order is thus a common feature of language that can produce different syntactic structures with the same semantic interpretation [12]. To fully comprehend phrases, then, the human brain must somehow assign a meaning to each word in a way that makes sense syntactically. The region of the temporal lobe to which the auditory and visual cortices deliver information is currently being investigated to understand this process.

One leading hypothesis is that shortly after processing the auditory or visual information, the brain begins decoding the phrase structure by assigning each word in the phrase to a category. These categories consist of the basic word classes (nouns, verbs, adjectives, etc.). The near-immediate next step is to reveal the subtler relationships between the words (e.g., which adjective describes which noun). Scientists have been able to observe separate brain activity during these two phases of language comprehension using two main kinds of experiments. The first type of experiment compares brain activity when people are given syntactically valid sentences versus sentences with incorrect word order [13]. For example, a participant could be presented with two phrases: “the boy runs” and “the runs boy.” The second phrase is ungrammatical because English word-order rules would require “runs” to act as an adjective modifying “boy,” which it cannot do. This approach can also be extended to signed languages, which rely on facial expressions and subtle head movements to establish grammatical function. In this case, scientists present deaf signers with a sign accompanied by a head movement or facial expression that does not normally occur with it, interrupting the same syntactic assignment process [14]. By using a word incorrectly, researchers are able to interrupt the first part of sentence parsing, word-category assignment, and observe the resulting effect on the assignment of word relationships [3]. This type of experiment shows notably increased activity in the upper part of the temporal lobe, which hints at that region’s involvement in sorting out initial syntactic relationships.

The second type of experiment used to study the decoding of phrase structure involves the use of artificial grammar. In these experiments, people learn a new set of grammar rules designed by researchers, and then are tested on how well they can apply them. This process lets researchers gain insight into the areas of the brain concerned with phrase-structure rule assignments. At the same time, researchers are able to study syntactic functions without the influence of word meaning. The participants’ ability to successfully process made-up structure rules lets researchers deduce whether sentence structure and word meaning are stored separately in the brain. Certain artificial grammar experiments like these highlight activation in the same top part of the temporal lobe but also show increased brain activity in the frontal lobe. Nearly all neurological research exploring the sorting of language supports the idea that sentences are first parsed into categories and then almost simultaneously organized into a sort of tree that delineates the words’ relationships to each other [3] .

After the brain takes in the auditory or visual information and processes the phrase’s structure, all in a matter of milliseconds, it begins the third step of language processing: integrating the multiple levels of information into one coherent message based on word meaning and usage. Over the past 15 years, research into the neurolinguistic separation between syntactic and semantic processes has split into several categories. These categories revolve around the idea that all information processed up until this point needs to somehow be combined. This unsurprisingly means that the majority of the previously discussed neural regions play a role during the integration process [3] . The most current model suggests that the temporal lobe can be broken into two parts, one remembering the structure of the incoming phrasal information and the other dealing with any ambiguity that could be present in the sentence. This separation is based on activation in the front part of the temporal lobe that is unaffected by fake words as long as the overall structure is correct. For example, in the sentence “the linkish dropner borked quastically,” none of the words are real words. But an English speaker is still able to identify which word is functioning as the noun, verb, etc., which alone lets them determine if the phrase is grammatical or not. This again demonstrates that syntax is processed separately from word meaning. The back part of the temporal lobe is the neural region observed to be increasingly active as the ambiguity of the phrase increases [3] .

Ambiguity can arise when words are used grammatically but have several possible correct meanings. This phenomenon is what makes certain phrases funny. The joke “How do you make a turtle fast? Stop feeding it.” is humorous because the word “fast” is used ambiguously: at first it seems to refer to speed, but the punchline reveals that it means going without food. As ambiguity increases, so does activity in the posterior area of the temporal lobe. This region also shows increased activity with increasingly complex verbs and a greater number of prepositional phrases (for example, “the dog barked” versus “the dog jumped and barked from the porch at the cat in the tree above”) [3]. Other studies unrelated to language have shown that the posterior temporal lobe is involved in several other types of information integration, from audiovisual stimuli to face recognition and more, so its involvement in language processing is well supported [15].

In addition to the temporal lobe, the frontal lobe has been shown to play a major role in the third step of phrase processing (the combination of syntactic and meaning-based information to form a complete message) [3] . Broca’s area, a region in the frontal lobe, has been particularly interesting to scientists trying to narrow down how the brain accomplishes such a complex process. Researchers have been able to make a direct link between Broca’s area and several of the most important language processing steps. One well-established link exists between Broca’s area and syntactic complexity [16] .

Like the posterior temporal lobe, Broca’s area shows increased activity as sentences become more complex and more difficult to process. This makes sense given the symptoms of damage to Broca’s area, in which people lose their advanced language skills but retain comprehension of much simpler phrases and word meanings [16].

Other studies have found Broca’s area to be highly recruited when people learn artificial grammar rules, suggesting that it plays a role not only in using but also in acquiring complex language structures. Both of these ideas are consistent with research showing that Broca’s area may be involved in sequence processing more generally, and with evidence that it may contribute to working memory, defined as the short-term storage of information for relatively immediate use [17]. It would make sense that processing a complex sentence requires storing some information while processing the rest, but the evidence on this point is contradictory: some patients with brain injury showed a link between working memory capacity and complex sentence comprehension, while others did not. So Broca’s area may well be involved in sentence comprehension, but other areas of the brain are likely involved in ways yet to be discovered [17]. Because neurolinguistic research is evolving rapidly, the involvement of the regions identified so far is likely just the tip of the iceberg.

The study of language is ironic at its core: we need to use language to study language. It has long been proposed that the very things that make us human are fundamentally based on language. Thinking in complex and analytical ways has enabled humans to develop the many elaborate societies that exist today. Without language, communicating these highly developed, complex ideas becomes impossible, as demonstrated by the hindered communication skills of those deprived of linguistic input in their formative years [18]. People who lack sufficient linguistic input, such as severely abused and isolated children or deaf people surrounded exclusively by non-signing hearing people, can develop basic communication skills, but these are usually limited to gestures and lack the linguistic tools needed to communicate intricate thoughts and messages. Because communication is universal, discoveries in linguistics reach far beyond the field itself. The neurological basis of language is only now beginning to be unveiled thanks to crucial technological advances, and the coming century is likely to bring many more discoveries.

1. Campbell, L. “The History of Linguistics.” The Handbook of Linguistics, 30 Nov. 2007, doi:10.1111/b.9781405102520.2002.00006.x.

2. Chang, E. F., et al. “Contemporary Model of Language Organization: An Overview for Neurosurgeons.” Journal of Neurosurgery, Feb. 2015, pp. 250–261, doi:10.3171/2014.10.jns132647.

3. Friederici, A. D. “The Brain Basis of Language Processing: From Structure to Function.” Physiological Reviews, vol. 91, no. 4, 1 Oct. 2011, pp. 1357–1392, doi:10.1152/physrev.00006.2011.

4. Hickok, G. “The Cortical Organization of Speech Processing: Feedback Control and Predictive Coding the Context of a Dual-Stream Model.” Journal of Communication Disorders, vol. 45, no. 6, 2012, pp. 393–402, doi:10.1016/j.jcomdis.2012.06.004.

5. Upadhyay, J., et al. “Effective and Structural Connectivity in the Human Auditory Cortex.” Journal of Neuroscience, vol. 28, no. 13, 2008, pp. 3341–3349, doi:10.1523/jneurosci.4434-07.2008.

6. Griffiths, T. D., and Warren, J. D. “The Planum Temporale as a Computational Hub.” Trends in Neurosciences, vol. 25, no. 7, 11 Oct. 2002, pp. 348–353, doi:10.1016/s0166-2236(02)02191-4.

7. Hickok, G., et al. “Sign Language in the Brain.” Scientific American, vol. 284, no. 6, 2001, pp. 58–65, doi:10.1038/scientificamerican0601-58.

8. Neville, H. J., et al. “Cerebral Organization for Language in Deaf and Hearing Subjects: Biological Constraints and Effects of Experience.” Proceedings of the National Academy of Sciences, vol. 95, no. 3, 1998, pp. 922–929, doi:10.1073/pnas.95.3.922.

9. Fromkin, V. A. “Sign Languages: Evidence for Language Universals and the Linguistic Capacity of the Human Brain.” Sign Language Studies, vol. 1059, no. 1, 1988, pp. 115–127, doi:10.1353/sls.1988.0027.

10. Campbell, R., et al. “Sign Language and the Brain: A Review.” Journal of Deaf Studies and Deaf Education, vol. 13, no. 1, 2008, pp. 3–20, doi:10.1093/deafed/enm035.

11. Gell-Mann, M., and Ruhlen, M. “The Origin and Evolution of Word Order.” Proceedings of the National Academy of Sciences of the United States of America, vol. 108, 2011, pp. 17290–17295, doi:10.1073/pnas.1113716108.

12. Dryer, M. S. “On the Six-Way Word Order Typology.” Studies in Language, vol. 37, no. 2, 2013, pp. 267–301, doi:10.1075/sl.37.2.02dry.

13. Bornkessel, I., and Schlesewsky, M. “The Extended Argument Dependency Model: A Neurocognitive Approach to Sentence Comprehension across Languages.” Psychological Review, vol. 113, no. 4, Oct. 2006, pp. 787–821, doi:10.1037/0033-295x.113.4.787.

14. Grossman, R. B., and Shepard-Kegl, J. A. “To Capture a Face: A Novel Technique for the Analysis and Quantification of Facial Expressions in American Sign Language.” Sign Language Studies, vol. 6, no. 3, 2006, pp. 273–305, doi:10.1353/sls.2006.0018.

15. Shen, W., et al. “The Roles of the Temporal Lobe in Creative Insight: An Integrated Review.” Thinking & Reasoning, vol. 23, no. 4, 2017, pp. 321–375, doi:10.1080/13546783.2017.1308885.

16. Embick, D., et al. “A Syntactic Specialization for Broca’s Area.” Proceedings of the National Academy of Sciences, vol. 97, no. 11, 2000, pp. 6150–6154, doi:10.1073/pnas.100098897.

17. Rogalsky, C., et al. “Broca’s Area, Sentence Comprehension, and Working Memory: An fMRI Study.” Frontiers in Human Neuroscience, vol. 2, 2008, doi:10.3389/neuro.09.014.2008.

18. MacSweeney, M., et al. “Phonological Processing in Deaf Signers and the Impact of Age of First Language Acquisition.” NeuroImage, vol. 40, no. 3, 2008, pp. 1369–1379, doi:10.1016/j.neuroimage.2007.12.047.