Introduction

Hunters of the night, unseen during the day, bats are some of the most interesting creatures on the planet. Making up roughly one in five mammal species, they have more in common with humans than one might expect. Some can live for decades, just like us; others live together in communities. Because both bats and humans are mammals, the construction and anatomy of our auditory systems are also much the same. Yet, with just a few modifications, bats have evolved the extraordinary adaptation of echolocation, some to the point where they barely need vision to get around [1]. This ability is what allows them to hunt in the dead of night, giving them access to one of the most abundant ecological niches on Earth.

Echolocation is a remarkable neurological feat. Bats are able to derive a treasure trove of information about their surroundings from sonic rather than visual cues. To accomplish this, their brains must solve a fascinating computational problem. We will explore in detail what kinds of signals bats pay attention to and how these enable them to perceive their environment.

Some echolocating bats have little use for their eyes. Similarly, some blind humans have learned to echolocate, in no small part thanks to neuroplasticity, the brain’s ability to rewire itself. We’ll see that echolocating bats and humans have strikingly similar neural circuitry, reflecting our shared evolutionary ancestry. Finally, we’ll look at what echolocation “feels like” and what this means neurologically.

Echolocation Mechanics

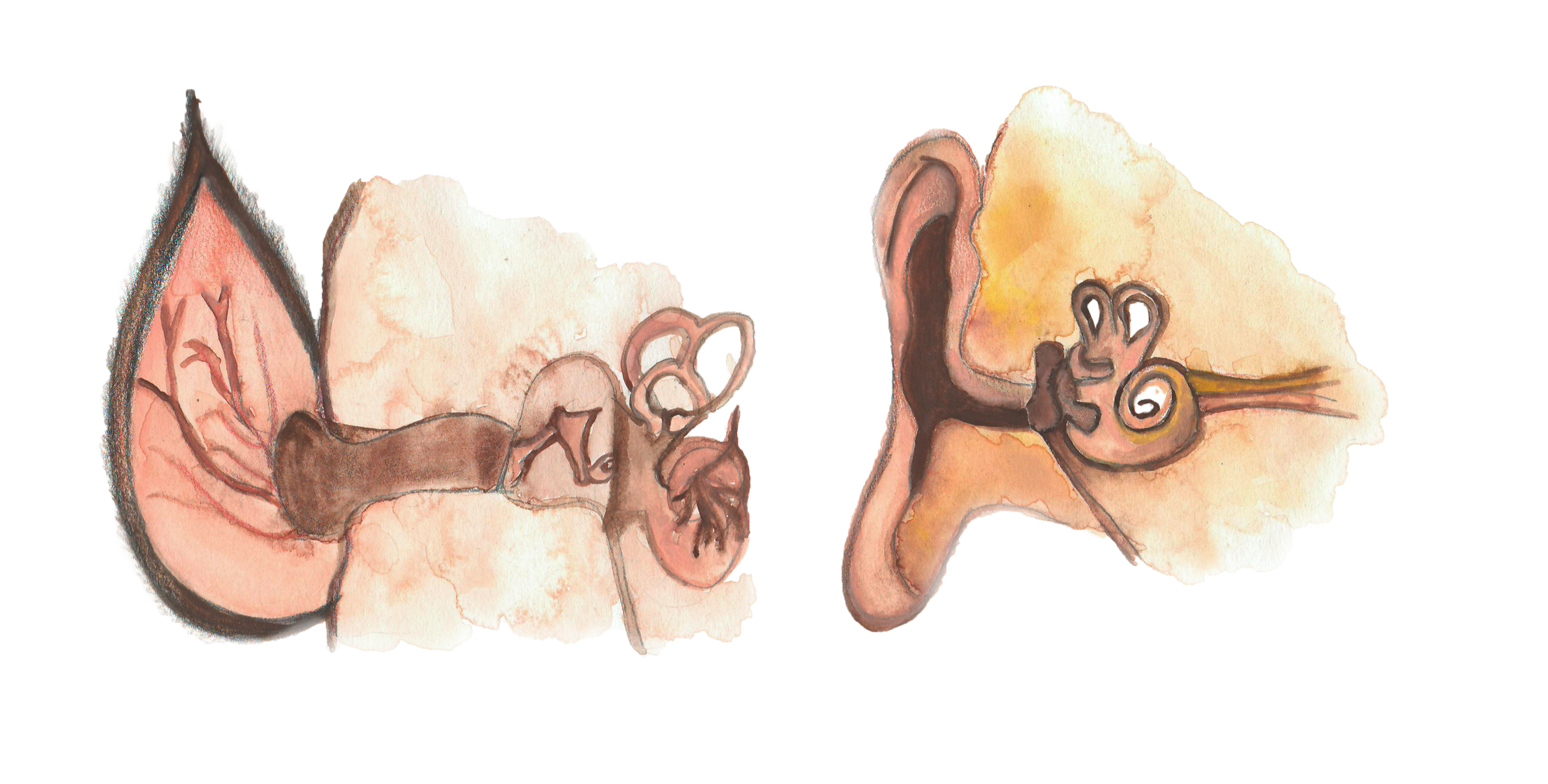

Echolocation allows bats to gather spatial information about their surroundings through sound. Bats emit calls, mostly at pitches far above the range of human hearing, and learn about their surroundings from the echoes those calls produce off nearby objects. An echo is first detected by the cochlea in the bat’s inner ear; the resulting signals travel to the brainstem, then to the midbrain, and finally, via the thalamus, to the auditory cortex [2]. By the time they reach the auditory cortex, neuronal firing represents features of objects, such as their distance, rather than raw acoustic parameters like pitch and loudness. Auditory signals follow this same pathway in other mammals, including humans, suggesting that it has been conserved over the course of evolution. The major difference between bats and other mammals is generally that these regions are enlarged, not that they have more complex circuitry [2].

When a bat makes a call, objects in its environment reflect the sound in different ways depending on what they are made of. From these relatively small differences in the echoes, bats’ auditory systems model features of the objects, such as their size, distance, and location relative to the bat. We can think of echolocation as a video camera in darkness: a louder call is analogous to shining more light on the scene so that the camera can see better. Similarly, calling more often (a higher call rate, not a higher pitch) is equivalent to raising the camera’s frame rate so that it can better track objects moving through the environment.

Bats’ “frame rates” are extremely high: many produce over 200 calls per second as they close in on their prey, and their auditory systems are fast enough to analyze each call individually [3]. Some bats also produce calls of up to 140 dB, loud enough to cause permanent damage to human ears [4]. To avoid damaging their own ears, a muscle in the middle ear contracts approximately 6–8 milliseconds before each call, reducing the transmission of sound through the small bones that conduct it to the inner ear. This leaves the bat nearly deaf until 2–8 ms after the call ends, just in time to hear the echoes that are so crucial to its survival [5].
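A high call rate also limits how far away an object can be while its echo still returns before the next call goes out. The short Python sketch below works through that arithmetic. It assumes a speed of sound in air of 343 m/s (roughly 20 °C), ignores call duration and the ear-muscle timing described above, and uses 10 and 50 calls per second purely as illustrative comparison rates; only the 200 calls per second figure comes from the text. The function names are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air at roughly 20 °C


def inter_call_interval_ms(calls_per_second: float) -> float:
    """Time between the starts of successive calls, in milliseconds."""
    return 1000.0 / calls_per_second


def max_unambiguous_range_m(calls_per_second: float,
                            speed_of_sound: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Farthest object whose echo returns before the next call is emitted.

    The sound travels out and back, so the one-way distance is half of
    (speed of sound * time between calls). Call duration is ignored.
    """
    interval_s = 1.0 / calls_per_second
    return speed_of_sound * interval_s / 2.0


# 10 and 50 calls/s are illustrative; 200 calls/s is the rate cited in the text.
for rate in (10, 50, 200):
    print(f"{rate:>3} calls/s: {inter_call_interval_ms(rate):6.1f} ms between calls, "
          f"echoes unambiguous out to ~{max_unambiguous_range_m(rate):.2f} m")
```

At 200 calls per second, echoes from anything more than roughly 0.86 m away would arrive after the next call has already been emitted, which is consistent with such rapid calling being reserved for the final moments of an approach, when the prey is close.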

Despite their loud calls, bats have extremely sensitive hearing, and their cochleae are so finely tuned that they can discriminate frequencies as little as 0.1 Hz apart (at best, humans can manage 3–4 Hz) [6, 7]. Bats can therefore get highly accurate, high-resolution auditory feedback about their surroundings, much like a camera with an excellent lens and top-notch hardware.

The Echoic Code

However, a camera needs a chip to convert its sensor input into a meaningful image. That chip is the brain. Our brains compute features of our three-dimensional surroundings through vision, while bats get information about their space from sound. This raises an interesting computational problem: how do bats identify spatial features of their surroundings through their ears?

As humans, we can tell the general direction a sound comes from. Because sound travels at a roughly fixed speed, a sound coming from one side reaches the nearer ear slightly before the farther one. The brain assesses which ear received the sound first and how much later the other ear received it, and uses this time difference to estimate the sound’s horizontal direction. Bats use the same method. For vertical direction, the shape of our outer ears alters sounds depending on where they come from, making higher-pitched components of a sound slightly louder to us when its source is higher up [8]. Similarly, bats’ ears have a special appendage, the tragus, that shapes the frequency content of incoming echoes in ways that help the bat analyze vertical direction [9]. To localize prey effectively, bats also need to know how far away it is. Because sound travels at a fixed speed, the longer an echo takes to arrive after the call, the farther away the object is. Since the sound makes a round trip, the distance is half the delay multiplied by the speed of sound: in air, an echo arriving 10 ms after a call comes from an object about 1.7 m away. Using computations for these three dimensions (distance, horizontal angle, and vertical angle), bats can map the locations of objects throughout their surroundings.
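The three computations described above can be sketched in Python. This is a simplified geometric model, not a description of how bat or human brains actually compute. It assumes a speed of sound of 343 m/s and uses the common far-field approximation in which the interaural time difference equals the ear separation times the sine of the horizontal angle, divided by the speed of sound. It takes the vertical angle as given, because recovering it from spectral cues would require a model of a particular ear’s filtering. The 1.5 cm ear separation, the axis convention, and the function names are all hypothetical.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air at roughly 20 °C


def range_from_echo_delay(delay_s: float, c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Distance to an object from the call-to-echo delay.

    The sound travels out and back, so the one-way distance is half the round trip.
    """
    return c * delay_s / 2.0


def azimuth_from_itd(itd_s: float, ear_separation_m: float,
                     c: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Horizontal angle in degrees from the interaural time difference (ITD).

    Far-field approximation: extra path to the farther ear = separation * sin(angle).
    Positive ITD means the sound reached the right ear first (source to the right).
    """
    s = c * itd_s / ear_separation_m
    s = max(-1.0, min(1.0, s))  # clamp so noisy inputs stay inside asin's domain
    return math.degrees(math.asin(s))


def to_cartesian(distance_m: float, azimuth_deg: float,
                 elevation_deg: float) -> tuple[float, float, float]:
    """Convert (distance, horizontal angle, vertical angle) to x, y, z in metres.

    Hypothetical convention: x points straight ahead, y to the right, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (distance_m * math.cos(el) * math.cos(az),
            distance_m * math.cos(el) * math.sin(az),
            distance_m * math.sin(el))


# Example: an echo arrives 10 ms after the call, the right ear hears it about
# 22 microseconds before the left, and spectral cues (not modelled) put it 10 degrees up.
ear_separation = 0.015  # hypothetical 1.5 cm between a small bat's ears
distance = range_from_echo_delay(0.010)
azimuth = azimuth_from_itd(22e-6, ear_separation)
print(f"distance ~{distance:.2f} m, azimuth ~{azimuth:.0f} deg")
print("x, y, z =", tuple(round(v, 2) for v in to_cartesian(distance, azimuth, 10.0)))
```

The sign conventions and ear-separation value are choices made for this sketch; a real head does not behave like two points in free space, so the sine model is only a first approximation.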

With these mapping abilities, bats can also perform more complex object recognition. Because similar materials echo in similar ways, a bat that hears echoes characteristic of a particular object, such as a leaf, across a range of horizontal angles can infer that a tree probably occupies that space. And because bats identify objects by the characteristic echoes of their materials, they do not need to rely on size to recognize them: a small tree and a large tree are both recognized as trees because both return leaf-like echoes. These adaptations and many more make bats extremely effective hunters and have contributed to a huge diversity of echolocating species.
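The tree example can be made concrete with a toy calculation. The Python sketch below assumes each echo has already been labelled by material (the hard part, which it does not model), reads off the angular span of the “leaf” echoes, and converts that span to an approximate width using their average distance. The detections, labels, and function names are hypothetical. The label says what the object is, while the span and distance say how big it is, which is why a small tree and a large tree can carry the same label.

```python
import math

# Hypothetical detections: (horizontal angle in degrees, distance in m, echo label).
# Labels are assumed to have been assigned already from each echo's acoustic signature.
detections = [
    (-12.0, 4.0, "leaf"),
    (-5.0, 4.1, "leaf"),
    (3.0, 3.9, "leaf"),
    (8.0, 4.0, "leaf"),
    (25.0, 2.0, "insect"),
]


def angular_extent(dets, label):
    """(min, max) horizontal angle of echoes with this label, or None if absent."""
    angles = [a for a, _, lab in dets if lab == label]
    return (min(angles), max(angles)) if angles else None


def approx_width_m(dets, label):
    """Chord width spanned by the labelled echoes at their mean distance."""
    extent = angular_extent(dets, label)
    if extent is None:
        return None
    lo, hi = extent
    distances = [d for _, d, lab in dets if lab == label]
    mean_distance = sum(distances) / len(distances)
    return 2.0 * mean_distance * math.sin(math.radians(hi - lo) / 2.0)


print("leaf echoes span", angular_extent(detections, "leaf"), "degrees")
print(f"estimated width of leafy object ~{approx_width_m(detections, 'leaf'):.1f} m")
```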

An Attainable Ability

As amazing as echolocation seems, it is not as unique to bats as we might think. Echolocation has also evolved independently in some birds and in toothed whales such as dolphins, and some of the auditory genes involved appear to have evolved in parallel in bats and toothed whales [11]. Most interesting of all, some blind humans have learned to echolocate in the absence of vision.

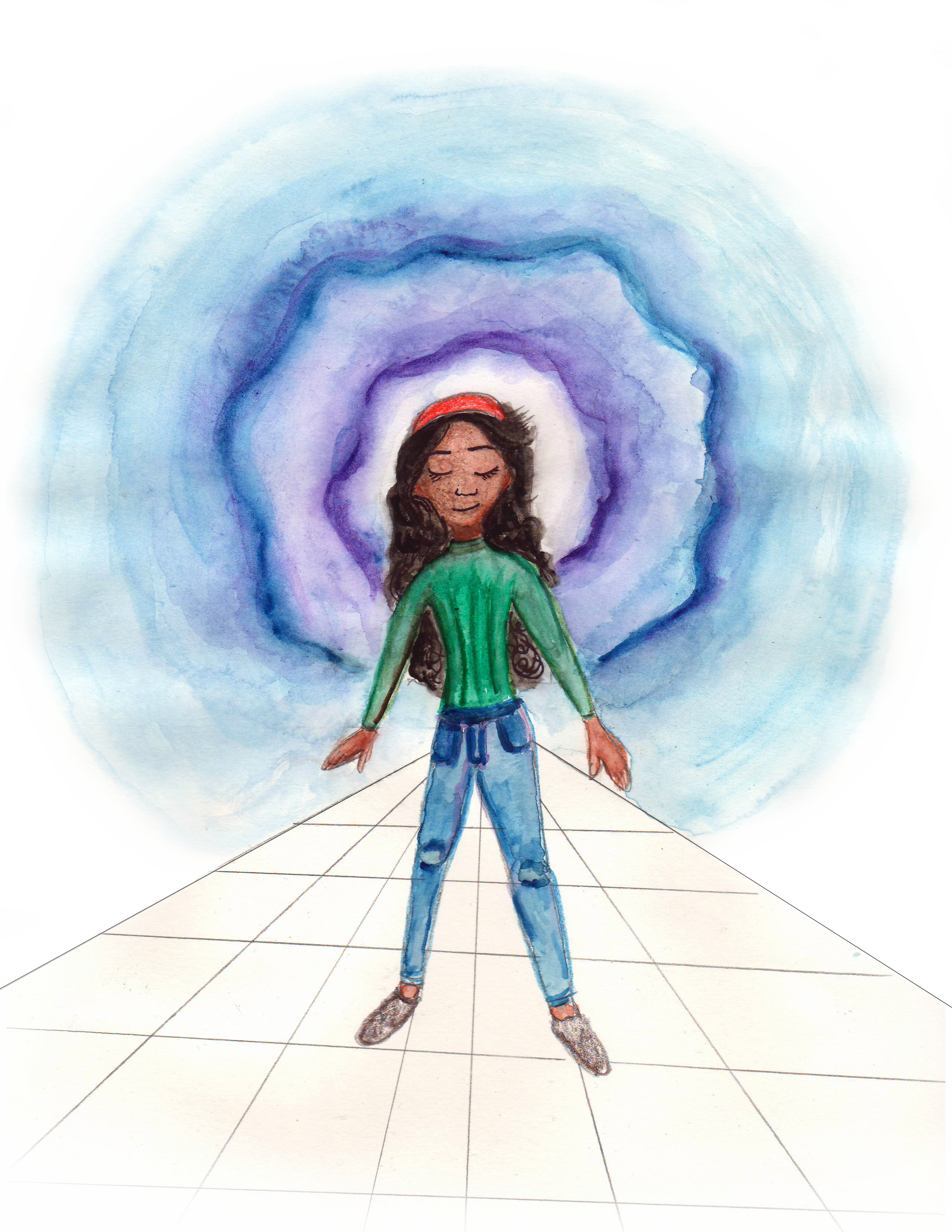

A 2011 case study centers on two blind human echolocators who have learned to produce clicks with the tongue against the palate in order to echolocate much like bats [12]. The researchers used functional magnetic resonance imaging (fMRI) to identify which brain regions were most active during echolocation and to better understand its neural mechanisms in humans. To their surprise, they found no difference in auditory cortex activity or hearing ability between these two individuals and sighted people. However, one brain region, the calcarine cortex, was more active in these two subjects than in non-echolocating blind and sighted subjects. This region was also more active when the echolocating subjects heard sounds that contained echoes than when they heard sounds without echoes. Although traditionally thought of as a visual region, the calcarine cortex may have shifted its function toward echolocation in the absence of vision, reflecting the human brain’s remarkable neuroplasticity. It was more active in the subject who had been echolocating longer, supporting the hypothesis that echolocation is a skill that can be developed and improved [12].

Despite the involvement of a region normally devoted to visual processing, these two subjects did not perceive “visual” images [1]. When echolocating, they were repurposing this region to hear, rather than see, their surroundings, a remarkable instance of neuroplasticity. The fact that humans can develop through experience an ability that bats acquired through evolution suggests that, rather than having a mechanistically different auditory system from ours, bats simply have certain features that are more pronounced. Within a matter of months, the brain’s neuroplasticity can bridge an auditory gap that evolution opened between us and bats over millions of years [1].

Daniel Kish, a blind man from Southern California, founded an organization that teaches echolocation to blind people. Blind since the age of one, he developed particularly effective echolocation abilities: he can discern features of distant buildings and nearby objects, and can even ride a bicycle. Rather than simply “helping” blind people, this approach empowers them by giving them another way of learning about their surroundings.

Conclusion

Studying echolocation in bats may help illuminate how humans echolocate, and has great potential to help blind people navigate. Studying echolocation in humans improves our understanding of neuroplasticity. Studying bats and humans together lets us investigate a natural experiment in how nature and nurture can produce the same ability. Answering these questions will lead us to a deeper understanding of mammalian perception, neuroplasticity, and the broader interactions between evolution and the environment.

References

1. Fenton, M. B., Grinnell, A. D., Popper, A. N., & Fay, R. R. (Eds.). (2016). Bat Bioacoustics (Springer Handbook of Auditory Research, Vol. 54). New York, NY: Springer. doi:10.1007/978-1-4939-3527-7

2. Covey, E. (2005). Neurobiological specializations in echolocating bats. The Anatomical Record Part A: Discoveries in Molecular, Cellular, and Evolutionary Biology, 287A(1), 1103–1116. doi:10.1002/ar.a.20254

3. Ratcliffe, J. M. (2009). Predator-prey interaction in an auditory world. In R. Dukas & J. M. Ratcliffe (Eds.), Cognitive Ecology II (pp. 201–225). Chicago, IL: University of Chicago Press. doi:10.7208/chicago/9780226169378.003.0011

4. Surlykke, A., & Kalko, E. K. V. (2008). Echolocating bats cry out loud to detect their prey. PLOS ONE, 3(4), e2063. doi:10.1371/journal.pone.0002063

5. Jen, P., & Suga, N. (1976). Coordinated activities of middle-ear and laryngeal muscles in echolocating bats. Science, 191(4230), 950–952. doi:10.1126/science.1251206

6. Simmons, A. M., Hom, K. N., Warnecke, M., & Simmons, J. A. (2016). Broadband noise exposure does not affect hearing sensitivity in big brown bats (Eptesicus fuscus). The Journal of Experimental Biology, 219(7), 1031–1040. doi:10.1242/jeb.135319

7. Kulkarni, S. (2001). ELE 201 Introduction to Electrical Signals and Systems: The Human Auditory and Visual Systems.

8. Purves, D., Augustine, G. J., Fitzpatrick, D., Katz, L. C., LaMantia, A.-S., McNamara, J. O., & Williams, S. M. (2001). Neuroscience (2nd ed.). Sunderland, MA: Sinauer Associates.

9. Wotton, J. M., & Simmons, J. A. (2000). Spectral cues and perception of the vertical position of targets by the big brown bat, Eptesicus fuscus. The Journal of the Acoustical Society of America, 107(2), 1034–1041. doi:10.1121/1.428283

10. Prat, Y., Taub, M., & Yovel, Y. (2016). Everyday bat vocalizations contain information about emitter, addressee, context, and behavior. Scientific Reports, 6(1), 39419. doi:10.1038/srep39419

11. Shen, Y.-Y., Liang, L., Li, G.-S., Murphy, R. W., & Zhang, Y.-P. (2012). Parallel evolution of auditory genes for echolocation in bats and toothed whales. PLOS Genetics, 8(6), e1002788. doi:10.1371/journal.pgen.1002788

12. Milne, J. L., Arnott, S. R., Kish, D., Goodale, M. A., & Thaler, L. (2015). Parahippocampal cortex is involved in material processing via echoes in blind echolocation experts. Vision Research, 109(Part B), 139–148. doi:10.1016/j.visres.2014.07.004