In a popular demonstration video by Christopher Chabris and Daniel Simons, viewers are asked to count the number of times a team in white shirts passes a basketball. Focused on the count, only about half of the participants notice something out of the ordinary [1].

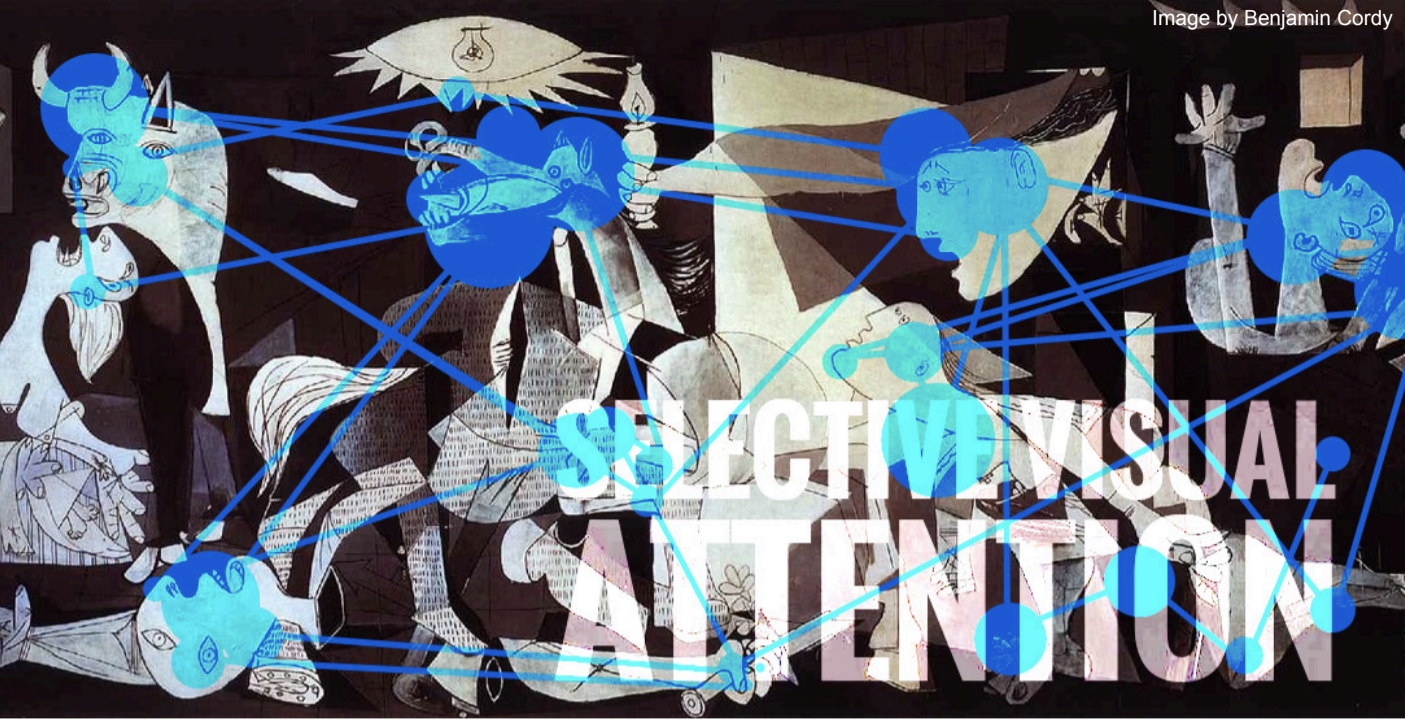

This tendency to overlook extraneous information in a cluttered visual environment reflects selective attention, which involves two distinct processes: information processing and information filtering. At any one moment, only a fraction of the information sent from the retina to the brain can be processed and acted upon, and of what is processed, typically an even smaller amount is attended to. As a result, people are aware mainly of salient stimuli, while other objects go relatively unnoticed within their field of vision [2].

In the video, the counting task directs attention to the team in white. As a result, when viewers are asked about the unattended stimulus (the players in black), they find it much harder to recall what took place. More strikingly, roughly half of viewers completely miss a person in a gorilla suit who walks through the frame, because their attention is fixed on the players in white [1].

Traditionally, selective visual attention has been assumed to follow a “spotlight” model, in which people focus processing on one area of their visual field and draw information from that area alone [3]. More recent studies, however, provide strong evidence for a theory of “competition” among stimuli across the broader visual field for the limited resource of working memory. Stimuli that are unusual or actively sought are analyzed further and consume working memory, while other, extraneous information is left largely unanalyzed and consumes little [2].

Fighting for Working Memory

Because working memory has limited capacity, the human brain cannot attend to a large number of objects in its visual field at once. In a series of simple experiments demonstrating this limit, two objects are presented together in the visual field for a brief moment [4]. Afterward, subjects are asked about some property of the objects, such as size, brightness, or shape.

The subjects' responses reveal three key results. First, dividing attention between two objects produces poorer performance than focusing on just one. Second, the bottleneck lies at the level of stimulus input: presenting the objects one after the other, with a slight delay, yields much better performance [4]. Finally, performance is largely unrelated to the distance between the objects, suggesting that people do not spotlight one area of their visual field to process information, as previously supposed [4].

These findings were first established empirically by Donderi and Zelnicker in 1969, who presented one target among a number of nontargets for subjects to identify [3]. The study distinguished “easy” cases from “hard” ones. In easy cases, the target was clearly distinct from the others (for example, a white square among a number of black circles). In hard cases, the target shared several properties with the nontargets (e.g., brightness, size, and shape).

In the easy cases, the nontargets compete only weakly for attention because they are clearly distinct from the target. When the target and nontargets share more features, however, it becomes much harder to pick out the target. This suggests that the unconscious bias toward uniqueness matters as much as purposefully directed attention in the visual field [4].
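A toy calculation makes the easy/hard contrast concrete. Suppose, purely for illustration, that each item is described by three features (brightness, size, shape) scaled to the range 0 to 1, and that the target's bottom-up distinctiveness is its average feature distance from the nontargets. The feature encoding, the distance measure, and the function name `salience` are hypothetical assumptions, not the procedure used by Donderi and Zelnicker.

```python
import math

# Hypothetical feature encoding: (brightness, size, shape), each in 0..1.
# These values are illustrative only; they are not taken from any cited study.
Item = tuple[float, float, float]


def salience(target: Item, nontargets: list[Item]) -> float:
    """Mean Euclidean distance between the target and each nontarget.

    A larger value means the target differs more from its surroundings,
    so the nontargets offer weaker competition (an "easy" case).
    """
    if not nontargets:
        raise ValueError("need at least one nontarget")
    return sum(math.dist(target, n) for n in nontargets) / len(nontargets)


# Easy case: a white square among black circles (differs in brightness and shape).
white_square = (1.0, 0.5, 1.0)
black_circles = [(0.0, 0.5, 0.0)] * 8

# Hard case: the target matches the nontargets in brightness and size,
# and its shape differs only slightly.
similar_target = (0.5, 0.5, 0.6)
similar_items = [(0.5, 0.5, 0.5)] * 8

easy = salience(white_square, black_circles)
hard = salience(similar_target, similar_items)
print(f"easy: {easy:.3f}, hard: {hard:.3f}")  # prints: easy: 1.414, hard: 0.100
```

The larger score in the easy case corresponds to the target "standing out"; as shared features accumulate, the score shrinks toward zero and the nontargets become stronger competitors.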

Bottom-up Bias and Top-down Control

As noted above, the current model of selective visual attention holds that targets and nontargets in a field of vision compete for working memory [5]. Under bottom-up bias, unique targets are easily identified among a number of homogeneous nontargets. Similarly, novel stimuli that enter a field of vision attract attention, and therefore processing power. In this sense, bottom-up bias can be regarded as a set of largely automatic processes.

Bottom-up bias may arise because nontargets are stored in memory as the context, or background, against which the target is compared. There may also be related biases toward objects that suddenly appear in the visual field, or toward objects that are larger, brighter, or faster. In such cases of stimulus-driven bias, the target seems to “pop out” of a homogeneous background [2].

Target selection does not depend on bottom-up bias alone, however; top-down control is needed to process the information that is relevant to current behavior. For instance, attention can be deliberately directed to one property of a set of shapes, or to a target of a discriminable color in a multicolored display. When targets are not easily discriminable, distinguishing them from nontargets becomes more difficult [5]. In other words, our attention is naturally drawn to objects that stand out from the background, but it can also be deliberately directed to whatever we want to focus on. When the two conflict, as when we search for objects that do not stand out in our visual field, those objects become harder to locate and distinguish [5].

One open question in the current model of selective attention is why objects compete for focus in the brain at all, and there are a few candidate explanations. One is that fully analyzing every object in a scene would be too complex for the brain to handle, so competition between objects reflects a limited capacity for identification [5]. An equally strong alternative proposes that the cause is a lack of control over response systems. Indeed, Eriksen and Eriksen showed in 1974 that letters subjects had specifically been told to ignore still interfered with their responses to a target letter [6]. In either case, competition likely occurs at multiple levels between sensory input and motor output [7].

Neural Processes

Several limitations at the level of sensory input make this competition crucial. Objects within a visual field compete for processing in a network of more than thirty cortical visual areas, organized into two main corticocortical streams that both begin in the primary visual cortex (V1). From there, the ventral stream projects to the inferior temporal cortex and supports object recognition, while the dorsal stream projects to the posterior parietal cortex and supports spatial perception and visuomotor performance. Both streams ultimately project to the prefrontal cortex [8].

Because the competition involves object recognition, the ventral stream is central to selective attention. The pathway runs mainly through the extrastriate visual areas V2 and V4, then through TEO, and ends in area TE of the inferior temporal cortex. As visual information progresses along the ventral stream, the processing it undergoes grows increasingly complex. For instance, V1 neurons act as local filters in both space and time, V2 neurons respond to visual contours, and inferior temporal neurons respond selectively to global object features [8].

Additionally, the receptive field of a visual neuron (the region of the visual field to which it responds) grows at each stage. Moving along the pathway from V1 to V4 to TEO to TE, typical receptive fields are on the order of 0.2°, 3.0°, 6.0°, and 25.0° of visual angle, respectively.
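A quick back-of-the-envelope comparison shows how much more of the scene later stages pool over. Assuming, purely for illustration, that the sizes above are diameters of roughly circular receptive fields, area scales with the square of size, so the ratio of areas $A$ between two stages is:

$$
\frac{A_{\mathrm{V4}}}{A_{\mathrm{V1}}} \approx \left(\frac{3.0^\circ}{0.2^\circ}\right)^{2} = 15^{2} = 225,
\qquad
\frac{A_{\mathrm{TE}}}{A_{\mathrm{V1}}} \approx \left(\frac{25.0^\circ}{0.2^\circ}\right)^{2} = 125^{2} = 15{,}625
$$

On that assumption, a single TE receptive field covers on the order of ten thousand times the area of a V1 field, which makes it far more likely to contain several objects at once.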

Receptive fields are therefore seen as a crucial processing resource for which objects in the visual field compete. As object features are coded along the ventral stream, the information available about any specific target declines as more objects fall within the same receptive fields. To focus attention, the visual system divides processing among the objects, and relevance is assigned to them through top-down or bottom-up processes that help decide which ones to focus on [9].
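The competition described here can be caricatured with a single model neuron whose response to several objects inside its receptive field is a weighted average of its responses to each object alone, with the weights standing in for bottom-up or top-down bias. This weighted-average form is an assumption chosen for illustration (similar in spirit to some biased-competition models), not a method taken from the cited sources; the function name `pooled_response` and all firing rates are hypothetical.

```python
def pooled_response(drives: list[float], weights: list[float]) -> float:
    """Weighted average of the responses each object would evoke on its own.

    drives[i]:  hypothetical firing rate (spikes/s) to object i shown alone
    weights[i]: hypothetical bias toward object i (bottom-up salience or
                top-down relevance); a larger weight means more influence
    """
    if not drives or len(drives) != len(weights):
        raise ValueError("need exactly one weight per object")
    if any(w <= 0 for w in weights):
        raise ValueError("weights must be positive")
    return sum(d * w for d, w in zip(drives, weights)) / sum(weights)


TARGET, OTHER = 50.0, 10.0  # illustrative rates, not data from any cited study

alone = pooled_response([TARGET], [1.0])                      # target alone in the field
unbiased = pooled_response([TARGET, OTHER], [1.0, 1.0])       # one competitor, no bias
crowded = pooled_response([TARGET] + [OTHER] * 3, [1.0] * 4)  # three competitors, no bias
attended = pooled_response([TARGET, OTHER], [4.0, 1.0])       # one competitor, bias toward target
print(alone, unbiased, crowded, attended)  # prints: 50.0 30.0 20.0 42.0
```

With no bias, each added competitor drags the response toward the weakly driving object, so the neuron's output says less about the target; a larger weight on the target pulls the response back toward its solo value. This reproduces the qualitative pattern described above as a cartoon, not a fitted model.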

Conclusions

Although the human retina is bombarded with information, only a fraction of it is processed and acted upon at any one moment. At several stages between input and output, objects in the visual field “compete” for limited processing capacity in the ventral stream of visual information. This competition is biased both by bottom-up processes (distinguishing objects from their background) and by top-down processes (selecting targets relevant to current behavior). Attention therefore acts less like a “spotlight” on particular areas of the visual field and more like a series of interactions among the objects themselves as they compete for processing.

References

1. Simons, Daniel. "Evidence for Preserved Representations in Change Blindness." Consciousness and Cognition 11, no. 1 (2002): 78-97.

2. Desimone, Robert, and John Duncan. "Neural Mechanisms of Selective Visual Attention." Annual Review of Neuroscience 18, no. 1 (1995): 193-222.

3. Donderi, Don, and Dorothy Zelnicker. "Parallel Processing in Visual Same-Different Decisions." Perception & Psychophysics 5, no. 4 (1969): 197-200.

4. Donderi, Don, and Bruce Case. "Parallel Visual Processing: Constant Same-Different Decision Latency with Two to Fourteen Shapes." Perception & Psychophysics 8, no. 5 (1970): 373-375.

5. Deco, Gustavo, and Josef Zihl. "Top-Down Selective Visual Attention: A Neurodynamical Approach." Visual Cognition 8, no. 1 (2001): 118-139.

6. Eriksen, Barbara, and Charles Eriksen. "Effects of Noise Letters upon the Identification of a Target Letter in a Nonsearch Task." Perception & Psychophysics 16, no. 1 (1974): 143-149.

7. de Fockert, J. W. "The Role of Working Memory in Visual Selective Attention." Science 291, no. 5509 (2001): 1803-1806.

8. Treisman, Anne M. "Strategies and Models of Selective Attention." Psychological Review 76, no. 3 (1969): 282-299.

9. Fox, Elaine. "Perceptual Grouping and Visual Selective Attention." Perception & Psychophysics 60, no. 6 (1998): 1004-1021.